Ease into productivity with Knative

Kubernetes-native serverless

Caleb Woodbine <calebwoodbine.public@gmail.com>

Introduction

- serverless compute

- Knative Serving and productivity

- helpful patterns for adopting Knative Serving

About me

based in Wellington, New Zealand. Software and infrastructure engineer. Cloud & Open Source enthusiast.

Background, Past and Present Projects

- Safe Surfer

- routers

- infrastructure

- ii.nz

- Kubernetes conformance

- sig-k8s-infra

- personal

- FlatTrack (software for humans living together)

Serverless compute?

What is it?

Focus and Abstraction

utility-focused computing. Run (and pay) on demand and event-based.

Commercial History

a brief look at public cloud offerings

Google Cloud App Engine

A language-first Container as a Service

(2008)

AWS Lambda

A Function as a Service

(2014)

AWS ECS

A Container as a Service

(2014)

Google Cloud Run

A Container as a Service

Implements the Knative Serving spec

(2019)

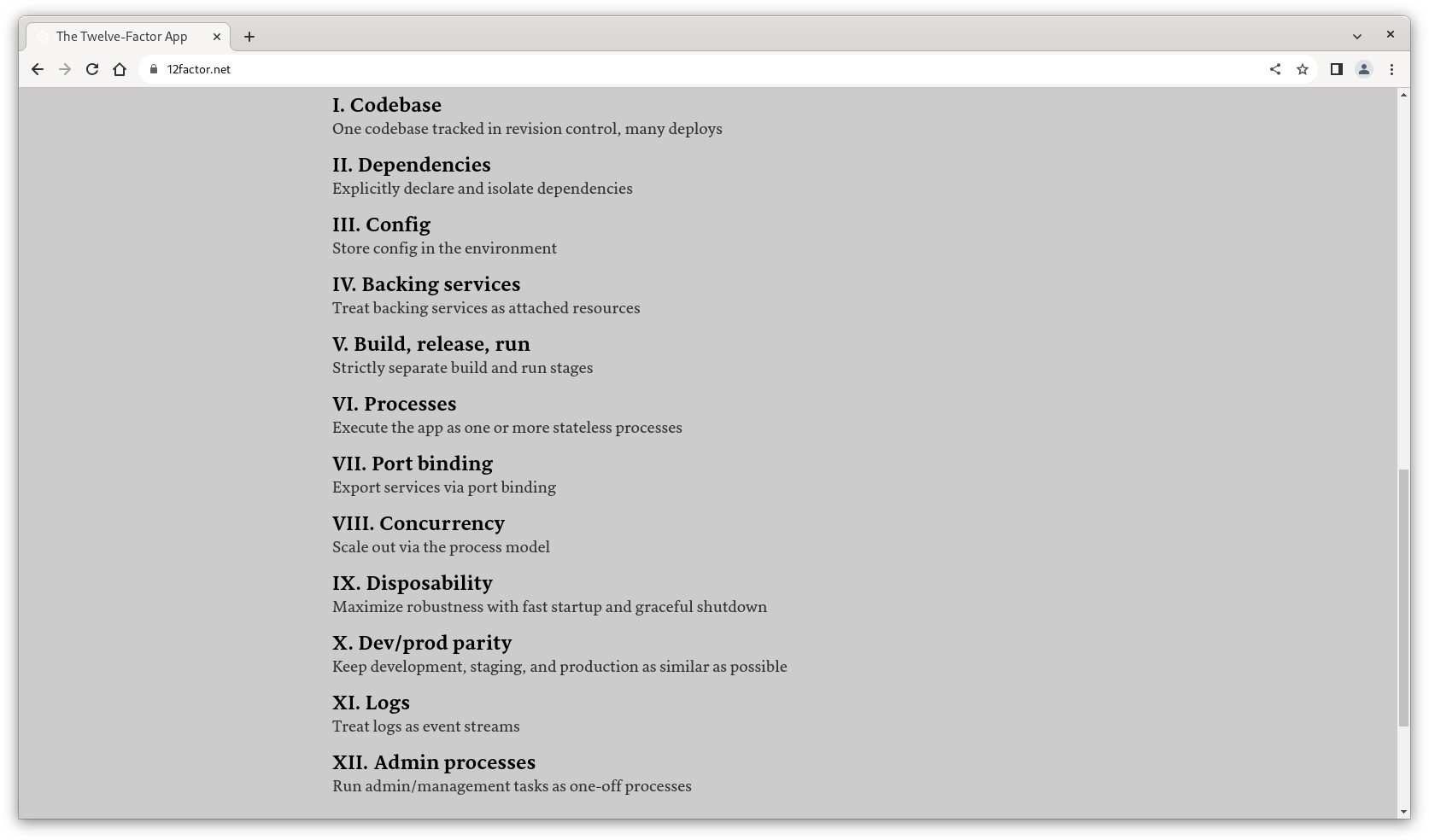

Stateless 12 factor apps

optimise your software for cloud and serverless environments

Deploying an app on Kubernetes

What you might do normally

Deployment

bring up the app

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx
      namespace: default # example purposes
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: nginx
      template:
        metadata:
          labels:
            app: nginx
        spec:
          containers:
            - name: nginx # container name, required in a Deployment
              image: cgr.dev/chainguard/nginx:latest
              ports:
                - containerPort: 8080
              securityContext:
                allowPrivilegeEscalation: false
                capabilities:
                  drop:
                    - ALL

Service

internally load balance

    apiVersion: v1
    kind: Service
    metadata:
      name: nginx
      namespace: default # example purposes
    spec:
      ports:
        - name: http
          port: 8080
          protocol: TCP
          targetPort: 8080
      selector:
        app: nginx
      type: ClusterIP

HorizontalPodAutoscaler

scaling based on CPU usage of services (basic)

    apiVersion: autoscaling/v1
    kind: HorizontalPodAutoscaler
    metadata:
      name: nginx
      namespace: default # example purposes
    spec:
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: nginx
      minReplicas: 3
      maxReplicas: 10
      targetCPUUtilizationPercentage: 80

PodDisruptionBudget

ensure that a certain number of replicas stay live

    apiVersion: policy/v1
    kind: PodDisruptionBudget
    metadata:
      name: nginx
      namespace: default # example purposes
    spec:
      minAvailable: 2
      selector:
        matchLabels:
          app: nginx

Certificate

get a TLS cert for securing the traffic

    apiVersion: cert-manager.io/v1
    kind: Certificate
    metadata:
      name: letsencrypt-prod
      namespace: default # example purposes
    spec:
      secretName: letsencrypt-prod
      issuerRef:
        name: letsencrypt-prod
        kind: ClusterIssuer
      commonName: "nginx.coolcoffee.company"
      dnsNames:
        - "nginx.coolcoffee.company"

Ingress

route the application through the ingress controller

    apiVersion: networking.k8s.io/v1
    kind: Ingress
    metadata:
      name: nginx
      namespace: default # example purposes
    spec:
      rules:
        - host: nginx.coolcoffee.company
          http:
            paths:
              - backend:
                  service:
                    name: nginx
                    port:
                      number: 8080
                path: /
                pathType: ImplementationSpecific
      tls:
        - hosts:
            - nginx.coolcoffee.company
          secretName: letsencrypt-prod

Important pieces of plumbing but…

Could it be simpler?

Enter: Knative Serving

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: nginx
      namespace: default
    spec:
      template:
        spec:
          containers:
            - image: cgr.dev/chainguard/nginx:latest

a Service deployed with Knative.

Perspective

If Kubernetes is an electrical grid, then Knative is its light switch

– Kelsey Hightower, Google Cloud Platform

About Knative

Knative is a project out of Google.

It was donated to the CNCF on March 2nd, 2022 as an incubating project.

see:

Scale to zero

No Pods

kubectl --namespace default get pods

No resources found in default namespace.

Request comes in

curl http://nginx.default.127.0.0.1.sslip.io

A Pod comes up

scales up to one

kubectl --namespace default get pods

    NAME                                      READY   STATUS    RESTARTS   AGE
    nginx-00001-deployment-7774b4cb55-vzlms   2/2     Running   0          4s

Timeout and the Pod is deleted

kubectl --namespace default get pods

    NAME                                      READY   STATUS        RESTARTS   AGE
    nginx-00001-deployment-7774b4cb55-vzlms   1/2     Terminating   0          92s

no requests have come in during the last 90 seconds, so the workload goes away

(after stability-window + scale-to-zero-grace-period)

No Pods

kubectl --namespace default get pods

No resources found in default namespace.
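The roughly 90 seconds seen above come from two autoscaler settings. As a minimal sketch, assuming Knative is managed through the Knative Operator, they could be set explicitly as follows. The values shown are assumed to match the upstream defaults (60s + 30s); check them against the installed version.

```yaml
apiVersion: operator.knative.dev/v1beta1
kind: KnativeServing
metadata:
  name: knative-serving
  namespace: knative-serving
spec:
  config:
    autoscaler:
      # window over which request metrics are averaged for scaling decisions
      stable-window: "60s"
      # upper bound on the wait before the last replica is removed
      scale-to-zero-grace-period: "30s"
```

Shorter values release resources sooner, at the cost of more frequent cold starts.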

Revisions

for each update, a new immutable revision is created.

kn revisions list -n default

    NAME          SERVICE   TRAFFIC   TAGS   GENERATION   AGE     CONDITIONS   READY   REASON
    nginx-00003   nginx     100%             3            31s     4 OK / 4     True
    nginx-00002   nginx                      2            63s     4 OK / 4     True
    nginx-00001   nginx                      1            2m32s   4 OK / 4     True

Traffic Splitting

split the traffic to a Service between separate revisions.

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: nginx
      namespace: default
    spec:
      traffic:
        - latestRevision: true
          percent: 90
        - revisionName: nginx-00001
          percent: 10
      template:
        spec:
          containers:
            - image: cgr.dev/chainguard/nginx:latest
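A traffic entry can also carry a tag, which gives that revision its own URL even when it receives 0% of the main traffic. The sketch below reuses the revision name from the listing above; the tag name `previous` is a hypothetical choice, and the resulting hostname (by default the tag prefixed to the Service name) depends on the cluster's tag template.

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: nginx
  namespace: default
spec:
  traffic:
    # all regular traffic goes to the newest revision
    - latestRevision: true
      percent: 100
    # the old revision stays reachable through its own tagged URL only
    - revisionName: nginx-00001
      percent: 0
      tag: previous # hypothetical tag name
  template:
    spec:
      containers:
        - image: cgr.dev/chainguard/nginx:latest
```

This can be handy for comparing an old and a new revision side by side before shifting percentages.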

Domains

default domains

configure using the Knative Operator

    apiVersion: operator.knative.dev/v1beta1
    kind: KnativeServing
    metadata:
      name: knative-serving
      namespace: knative-serving
    spec:
      config:
        domain:
          127.0.0.1.sslip.io: ""
          svc.cluster.local: |
            selector:
              visibility: local
          svc.coolcoffee.company: |
            selector:
              app: coolcoffee.company

cluster administrators set base domains for use

kn services list -n default

    NAME                 URL                                                   LATEST                     AGE    CONDITIONS   READY   REASON
    frontend             http://frontend.default.svc.coolcoffee.company        frontend-00001             5s     3 OK / 3     True
    nginx                http://nginx.default.127.0.0.1.sslip.io               nginx-00004                148m   3 OK / 3     True
    nginx-but-internal   http://nginx-but-internal.default.svc.cluster.local   nginx-but-internal-00001   64m    3 OK / 3     True

label-based

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: frontend
      namespace: default
      labels:
        app: coolcoffee.company
    ...

will serve the service under svc.coolcoffee.company, as per earlier config

domain template

cluster administrators are able to configure the domain template in the following config, using Go text templating

    apiVersion: operator.knative.dev/v1beta1
    kind: KnativeServing
    metadata:
      name: knative-serving
      namespace: knative-serving
    spec:
      config:
        network:
          domain-template: "{{.Name}}.{{.Namespace}}.{{.Domain}}"

By default the Service domains are hierarchical.

internal/private by default

use internal or change to your domain.

    apiVersion: operator.knative.dev/v1beta1
    kind: KnativeServing
    metadata:
      name: knative-serving
      namespace: knative-serving
    spec:
      config:
        domain:
          svc.cluster.local: ""

Vanity Domains

a custom addressable web domain for the service.

in Knative cli

kn domain create coolcoffee.company --ref coolcoffee-company

with YAML

    apiVersion: serving.knative.dev/v1beta1
    kind: DomainMapping
    metadata:
      name: coolcoffee.company
      namespace: default
    spec:
      ref:
        apiVersion: serving.knative.dev/v1
        kind: Service
        name: coolcoffee-company
        namespace: default

in your continuous delivery repo.

claiming domains

a domain must be claimed before a DomainMapping is able to route it to a Service.

    apiVersion: networking.internal.knative.dev/v1alpha1
    kind: ClusterDomainClaim
    metadata:
      name: coolcoffee.company
    spec:
      namespace: default

By default, creating a ClusterDomainClaim is a manual administrator step.

claiming domains automatically

automatically approved with

    apiVersion: operator.knative.dev/v1beta1
    kind: KnativeServing
    metadata:
      name: knative-serving
      namespace: knative-serving
    spec:
      config:
        network:
          autocreate-cluster-domain-claims: "true"

the default manual step ensures that only trusted domains are used.

Gradual Rollouts

set a custom amount of time before a new revision gains 100% of traffic.

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: nginx
      namespace: default
      annotations:
        serving.knative.dev/rollout-duration: "120s"
    spec:
      ...
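The annotation applies to a single Service. A cluster-wide default can, as far as the upstream configuration goes, be set through the `rollout-duration` key of the network config. A minimal sketch, assuming the Knative Operator is in use and reusing the same 120s value purely for illustration:

```yaml
apiVersion: operator.knative.dev/v1beta1
kind: KnativeServing
metadata:
  name: knative-serving
  namespace: knative-serving
spec:
  config:
    network:
      # default rollout duration for Services without the annotation
      rollout-duration: "120s"
```

A per-Service annotation would still take precedence where it is set.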

Port-detection

no need to specify a port if the application listens on a port >=1024.

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: nginx
      namespace: default
    spec:
      template:
        spec:
          containers:
            - image: cgr.dev/chainguard/nginx:latest # listens on :8080
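When being explicit is preferred, the port can still be declared. A sketch of the same Service with the port spelled out; Knative Serving accepts a single `containerPort` per container and also passes it to the application as the `PORT` environment variable:

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: nginx
  namespace: default
spec:
  template:
    spec:
      containers:
        - image: cgr.dev/chainguard/nginx:latest
          ports:
            # the one port that requests are forwarded to
            - containerPort: 8080
```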

Auto-TLS with Cert-Manager

automatically generate LetsEncrypt TLS certificates for Services using your ClusterIssuer.

    apiVersion: operator.knative.dev/v1beta1
    kind: KnativeServing
    metadata:
      name: knative-serving
      namespace: knative-serving
    spec:
      config:
        ...
        network:
          certificate-provider: cert-manager
          auto-tls: Enabled
          http-protocol: Redirected
          default-external-scheme: HTTPS
        certmanager:
          issuerRef: |
            kind: ClusterIssuer
            name: letsencrypt-prod

Minimum Service scale

set the scale settings for Services

    apiVersion: operator.knative.dev/v1beta1
    kind: KnativeServing
    metadata:
      name: knative-serving
      namespace: knative-serving
    spec:
      config:
        ...
        autoscaler:
          min-scale: "2" # config values are strings, so the number is quoted
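The setting above is cluster-wide. Scale bounds can also be set per Service through annotations on the revision template. A minimal sketch, assuming a recent Knative version (older releases spell these annotations `minScale` and `maxScale`); the numbers are illustrative only:

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: nginx
  namespace: default
spec:
  template:
    metadata:
      annotations:
        # never scale this Service below two Pods (disables scale to zero for it)
        autoscaling.knative.dev/min-scale: "2"
        # never scale this Service above ten Pods
        autoscaling.knative.dev/max-scale: "10"
    spec:
      containers:
        - image: cgr.dev/chainguard/nginx:latest
```

Because the annotations live on the template, changing them creates a new revision.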

Private services

manually specify visibility for a Service.

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: nginx
      namespace: default
      labels:
        networking.knative.dev/visibility: cluster-local
    spec:
      ...

gRPC Services

port names signal the protocol.

    apiVersion: serving.knative.dev/v1
    kind: Service
    metadata:
      name: nginx
      namespace: default
    spec:
      template:
        spec:
          containers:
            - image: grpc-ping-test
              ports:
                - name: h2c # HTTP/2 cleartext
                  containerPort: 8080

Tag resolution

resolves the digest of an image tag and uses it to pin the revision to an exact image.

e.g: cgr.dev/chainguard/nginx:latest to cgr.dev/chainguard/nginx@sha256:cba7c1fa2f1007db182d61f2635f4be07cf01e55dd3362ca7413c3a555a61875
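The same pinning can be done by hand by referencing the digest directly in the Service. A sketch using the digest from the example above, which will not necessarily match what `latest` resolves to today:

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: nginx
  namespace: default
spec:
  template:
    spec:
      containers:
        # a digest reference always points at the same image content
        - image: cgr.dev/chainguard/nginx@sha256:cba7c1fa2f1007db182d61f2635f4be07cf01e55dd3362ca7413c3a555a61875
```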

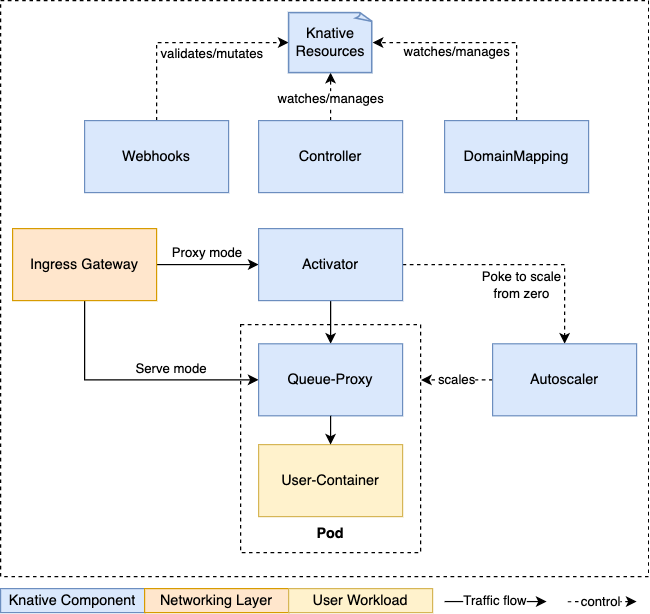

Knative Serving Architecture

resource mappings

Components (1/2)

core

- activator

- queues requests to Services for use in autoscaling

- autoscaler

- scales Service pods in response to metrics and incoming requests

- controller

- manages and reconciles Knative resources and their respective states

- webhook

- validates and mutates Knative resources

Components (2/2)

in Service Pods:

- queue-proxy

- proxies requests and collects metrics about the Service Pod

Components (2/2)

network layer options:

- Kourier

- Knative purpose-built ingress controller with Envoy Proxy

- Istio

- Ingress controller and Service Mesh

- Contour

- Ingress controller

Security Efforts

require non-privileged applications

the following

    apiVersion: operator.knative.dev/v1beta1
    kind: KnativeServing
    metadata:
      name: knative-serving
      namespace: knative-serving
    spec:
      config:
        features:
          secure-pod-defaults: "enabled"

adds the following SecurityContext to the workload container

    ...
    securityContext:
      allowPrivilegeEscalation: false
      capabilities:
        drop:
          - ALL

security guard

uses a specific extended queue-proxy to audit the Service, allowing for detection and blocking of service misbehaviour

    apiVersion: operator.knative.dev/v1beta1
    kind: KnativeServing
    metadata:
      name: knative-serving
      namespace: knative-serving
    spec:
      security:
        securityGuard:
          enabled: true

(not complete installation)

see more at: https://knative.dev/docs/serving/app-security/security-guard-about/

mTLS (alpha)

an optional feature to encrypt internal traffic between ingress, activator and queue-proxy components.

Downsides

- cold start penalties (varies based on compute and components: e.g: runc/crun)

- only supports HTTP/HTTPS/gRPC traffic

Positive side-effects

- greener compute scheduling through demand-based scheduling

- same APIs where you need them, whether in a data center, on-prem or on a local machine

In the wild

commercial offerings include

- Google Cloud Run

- Red Hat OpenShift Serverless

- Chainguard managed offering (Hakn)

- IBM Cloud Code Engine

- TriggerMesh

Helpful patterns for adopting Knative

Platform teams

- knative-operator

- manage Knative more easily

- fluxcd or argocd

- deliver Kubernetes-native infrastructure as code continuously

Developers

kn quickstart kind

create a service with kn cli

kn -n default service create nginx --image=cgr.dev/chainguard/nginx:latest

creating an app with the kn func cli

create the small boilerplate

kn func create --language go --template http

write a handler

    package function

    import (
        "context"
        "fmt"
        "net/http"
    )

    // Handle an HTTP Request.
    func Handle(ctx context.Context, res http.ResponseWriter, req *http.Request) {
        fmt.Println("request in!")
        res.WriteHeader(http.StatusOK)
        // Write takes a byte slice, so the string is converted first.
        res.Write([]byte("OK"))
    }

deploy the function

kn func deploy

Knative is Open Source and Community Driven

Come join us at

Knative Serving Demo

Questions

(end)